Browser is the operating system for the web, agree? HTTP headers can tell the browser which guidelines it should follow when handling a response from a web app, and this protects users from many user-centric attacks. I call them “guidelines” because following the headers set by the web server is entirely up to the browser: the browser decides whether to honor them or not. Worse, different browsers follow different rules. And an attacker may not be using a browser at all; even if she is, she can make it ignore these guidelines. We need a way to enforce these guidelines as a standard.
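To make the kind of header concrete, here is a minimal Python sketch of what a response-header step (in a WAF or reverse proxy) might do: add a few common security headers when the application forgot them. The header set and values are illustrative assumptions rather than a recommended policy, and `add_security_headers` is a hypothetical helper, not part of any WAF's API.

```python
# Illustrative baseline of browser-enforced security headers (assumed values).
DEFAULT_SECURITY_HEADERS = {
    "Strict-Transport-Security": "max-age=31536000; includeSubDomains",
    "Content-Security-Policy": "default-src 'self'",
    "X-Content-Type-Options": "nosniff",
    "X-Frame-Options": "DENY",
    "Referrer-Policy": "no-referrer",
}


def add_security_headers(headers: dict) -> dict:
    """Return a copy of the response headers with missing security headers added.

    Header names are compared case-insensitively, so a header the application
    already set (in any casing) is left untouched.
    """
    result = dict(headers)
    present = {name.lower() for name in result}
    for name, value in DEFAULT_SECURITY_HEADERS.items():
        if name.lower() not in present:
            result[name] = value
    return result


if __name__ == "__main__":
    response = {"Content-Type": "text/html", "x-frame-options": "SAMEORIGIN"}
    for name, value in add_security_headers(response).items():
        print(f"{name}: {value}")
```

Headers the application already sent are left alone, whatever their casing. These headers still only help when the client is a browser that honors them, which is exactly the limitation described above.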

A web application can be developed in-house by the company, or it can come from an external source. It can be open source or closed source, the latter often restricted from modification by an EULA. What happens when a security professional finds an application vulnerability? Inform the developer? Yes. But how much does that help in getting a fast fix?

Expecting a developer to prioritize security the way we do while building an application is asking too much; as far as I have seen, their priorities are different. When security is considered throughout the SDLC, development or release schedules get impacted, which is not ideal for anyone. We live in a competitive world, and whoever comes first has the advantage. I still recommend considering security at every point of the development life cycle. As the wisdom goes, “Security should be an enabler”!

How can a security professional help with the problems a web application faces? What if the web application is a security nightmare?

Enforce protection so that no one can bypass it. We can use a Web Application Firewall (WAF): security concerns specific to the web application should ideally be handled by this firewall, while attacks at layers 3 and 4 should be met by an IDS or IPS, which work at those layers. A web application firewall protects anything served over HTTP. It has visibility into the four phases of an HTTP transaction: request headers, request body, response headers, and response body.

Web application firewalls work in two modes: embedded and proxy. Cloudflare WAF is an example of a proxy firewall. ModSecurity running on the same web server can be called embedded, although ModSecurity can also function as a proxy WAF. Each mode has its own benefits and limitations, but in my view embedded mode always wins, since it gives us much more flexibility.

Cloudflare, Barracuda, and many others are commercial options and can be expensive (depending on other factors). One prominent open-source option is ModSecurity; being open source, it is an excellent tool to have. Most of the recommendations that follow are based on it.

When a client (e.g., a browser) initiates a connection, it sends the request headers, then the request body, and the server responds with response headers and a response body. An embedded firewall has visibility into every phase of this communication, including the server and application logs. It can intercept any phase of the HTTP request and response, which lets us build extensive and effective protection against any attack aimed at our server. One exception: ModSecurity is itself a module in Apache, so it cannot protect against a vulnerability in the Apache software itself, or in anything else loaded before ModSecurity.
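The phase model can be sketched in Python as a toy inspector that attaches rules to the four phases and either only logs matches or blocks on the first one. The two mode names mirror values of ModSecurity's `SecRuleEngine` directive, but everything else here (`Transaction`, `PhasedInspector`, the rule signature) is a hypothetical simplification. For brevity the whole transaction is passed in at once, whereas a real embedded WAF sees each phase as it happens and can stop the request before the application runs.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# The four HTTP transaction phases, in the order the WAF sees them.
PHASES = ("request_headers", "request_body", "response_headers", "response_body")

# A rule inspects one phase's data and returns an alert message, or None.
Rule = Callable[[object], Optional[str]]


@dataclass
class Transaction:
    """Simplified HTTP transaction; a real WAF sees each part as it arrives."""
    request_headers: dict = field(default_factory=dict)
    request_body: str = ""
    response_headers: dict = field(default_factory=dict)
    response_body: str = ""


class PhasedInspector:
    """Toy model of an embedded WAF: rules are attached to a phase and run in order."""

    def __init__(self, mode: str = "DetectionOnly") -> None:
        if mode not in ("On", "DetectionOnly"):
            raise ValueError("mode must be 'On' or 'DetectionOnly'")
        self.mode = mode
        self.rules = {phase: [] for phase in PHASES}
        self.alerts = []  # (phase, message) for every match, in both modes

    def add_rule(self, phase: str, rule: Rule) -> None:
        self.rules[phase].append(rule)

    def inspect(self, tx: Transaction) -> bool:
        """Run each phase in order; return False if the transaction is blocked."""
        for phase in PHASES:
            data = getattr(tx, phase)
            for rule in self.rules[phase]:
                message = rule(data)
                if message is None:
                    continue
                self.alerts.append((phase, message))
                if self.mode == "On":
                    return False  # blocking mode: deny at the first match
        return True  # DetectionOnly only logs, never blocks


if __name__ == "__main__":
    waf = PhasedInspector(mode="DetectionOnly")
    waf.add_rule("request_body",
                 lambda body: "possible SQLi" if "' or 1=1" in body.lower() else None)
    allowed = waf.inspect(Transaction(request_body="user=admin' OR 1=1--"))
    print(allowed, waf.alerts)  # detection mode lets the request through but logs it
```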

My favorite method is to start with full denial: don't trust anything the application accepts. Keep the WAF in detection mode (don't block; in ModSecurity this is `SecRuleEngine DetectionOnly`) and let it run that way for a few days or weeks; a month is a good timeline. This helps us build a more complete baseline.

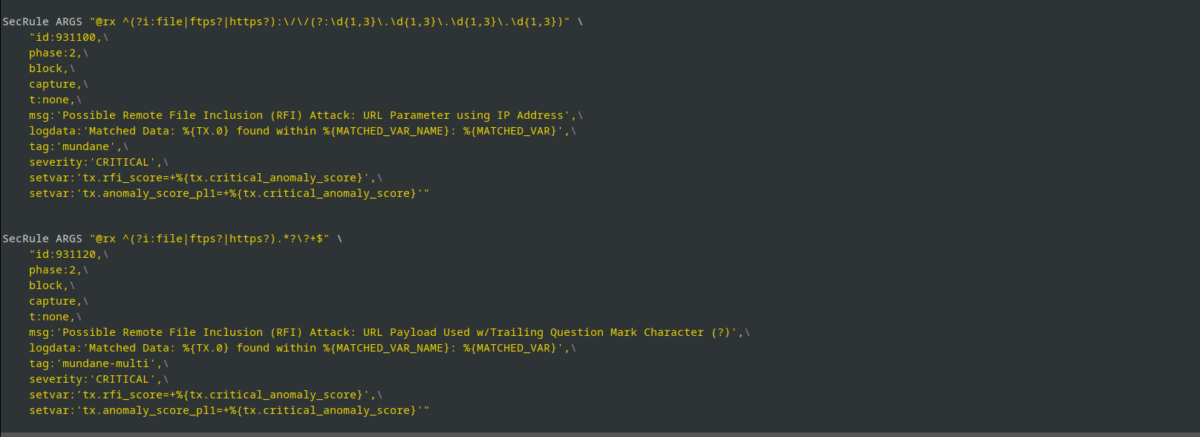

Now for rule creation. After reviewing a month of logs (which could be huge, so use your scripting skills), we will know what the ARGS (request arguments) are, what the URLs are, and what data each argument accepts. Creating rules this way is an extensive task, but once it is done you will know what your environment is capable of and can prevent future attacks easily and with confidence. More importantly, you gain valuable knowledge.
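A minimal Python sketch of that baseline step, assuming each log line has already been reduced to `METHOD URL` (a hypothetical simplified format; real ModSecurity audit logs need more parsing). It learns, per URL and argument, the widest character class seen and the longest value, then checks new requests against that positive model. The character classes and helper names are assumptions for illustration.

```python
import re
from urllib.parse import parse_qsl, urlsplit

# Character classes from most to least restrictive (assumed for illustration).
CLASSES = [
    ("digits", re.compile(r"[0-9]+")),
    ("alnum", re.compile(r"[A-Za-z0-9_-]+")),
    ("text", re.compile(r"[A-Za-z0-9 _.,@-]+")),
]
RANK = {name: rank for rank, (name, _) in enumerate(CLASSES)}
RANK["any"] = len(CLASSES)


def classify(value: str) -> str:
    """Return the most restrictive class that fully matches the value."""
    for name, pattern in CLASSES:
        if pattern.fullmatch(value):
            return name
    return "any"


def learn_baseline(request_lines):
    """Build {(path, arg): (widest_class, max_length)} from 'METHOD URL' lines."""
    profile = {}
    for line in request_lines:
        parts = line.split()
        if len(parts) < 2:
            continue  # skip malformed lines
        url = urlsplit(parts[1])
        for arg, value in parse_qsl(url.query):
            cls, length = classify(value), len(value)
            if (url.path, arg) in profile:
                old_cls, old_len = profile[(url.path, arg)]
                cls = max(cls, old_cls, key=RANK.__getitem__)
                length = max(length, old_len)
            profile[(url.path, arg)] = (cls, length)
    return profile


def check_request(profile, raw_url):
    """List how a request deviates from the learned baseline (empty list = OK)."""
    url = urlsplit(raw_url)
    if url.path not in {path for path, _arg in profile}:
        return [f"unknown URL {url.path}"]
    violations = []
    for arg, value in parse_qsl(url.query):
        if (url.path, arg) not in profile:
            violations.append(f"unknown argument {arg!r} on {url.path}")
            continue
        cls, max_len = profile[(url.path, arg)]
        if RANK[classify(value)] > RANK[cls]:
            violations.append(f"{arg!r} expected {cls} data")
        if len(value) > max_len:
            violations.append(f"{arg!r} longer than {max_len} characters")
    return violations


if __name__ == "__main__":
    profile = learn_baseline(["GET /item?id=42", "GET /search?q=red+shoes"])
    print(check_request(profile, "/item?id=1%20OR%201=1"))
```

Unknown URLs and arguments are flagged outright, in the spirit of starting with full denial. This sketch only learns URLs that carried query arguments in the logs; in practice you would also add some margin to the learned lengths and review the profile by hand before turning it into rules.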

ModSecurity has a free rule set, written by “experts” and hosted by OWASP, called the Core Rule Set (CRS). It has 200-plus rules with different blocking intensities, grouped into paranoia levels. The paranoia level defaults to 1 for most installs and goes up to 4 for advanced protection. The downside is that there can be many false positives and false negatives. Exclusions for CRS can be written easily, and this approach should be considered for beginners and time-constrained installations. Keep in mind that your exclusions shouldn't help an attacker bypass CRS. Advanced users can adapt CRS: take the rule logic for the likes of SQLi and RCE, modify it, and make it their own rules.
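To illustrate how paranoia levels and exclusions interact, here is a toy Python model. The rule IDs and substring patterns are hypothetical and far simpler than real CRS rules, which are regular-expression based. The point is that a rule is active only when its paranoia level is at or below the configured one, and that an exclusion is scoped to one rule, one argument, and one URL (loosely in the spirit of ModSecurity's `ctl:ruleRemoveTargetById` action), so it does not open a bypass elsewhere.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    rule_id: int
    paranoia_level: int  # rule is active when configured level >= this
    target: str          # argument name to inspect, or "*" for all arguments
    pattern: str         # lowercase substring; a toy stand-in for a regex


@dataclass(frozen=True)
class Exclusion:
    rule_id: int
    path: str  # URL the exclusion applies to
    arg: str   # argument removed from that one rule's inspection


def matched_rules(rules, exclusions, paranoia_level, path, args):
    """Return IDs of active rules that match, honoring narrowly scoped exclusions."""
    excluded = {(e.rule_id, e.arg) for e in exclusions if e.path == path}
    hits = []
    for rule in rules:
        if rule.paranoia_level > paranoia_level:
            continue  # belongs to a higher paranoia level than configured
        for name, value in args.items():
            if rule.target not in ("*", name) or (rule.rule_id, name) in excluded:
                continue
            if rule.pattern in value.lower():
                hits.append(rule.rule_id)
                break  # one hit per rule is enough
    return hits


if __name__ == "__main__":
    rules = [Rule(1001, 1, "*", "union select"), Rule(1002, 2, "*", "--")]
    comment = {"comment": "great post -- thanks"}
    print(matched_rules(rules, [], 2, "/blog/comment", comment))  # false positive
    fix = [Exclusion(1002, "/blog/comment", "comment")]
    print(matched_rules(rules, fix, 2, "/blog/comment", comment))
```

With the exclusion in place, the legitimate `--` in a blog comment no longer trips rule 1002, but rule 1001 still inspects the same field, and rule 1002 still applies on every other URL.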

Persistent storage can be used to track activity across multiple transactions. For example, to track a brute-force attack, we can use persistence to identify the IP address and geolocation and correlate them. Session management: most applications create a cookie after successful authentication, which acts as an authorization token for the authenticated user so they don't have to log in again on every page. We have to protect this cookie, all the more so if the application's session management is flawed. We can use persistence to tie the cookie to the IP address and user agent, combined with geodata. When a rule detects any illegal activity, we can invoke a sign-out, which invalidates the authorized cookie.
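Both uses of persistence can be sketched in Python with in-memory dictionaries standing in for ModSecurity's persistent collections. `BruteForceTracker` counts failed logins per IP in a sliding window; `SessionBinder` ties a session cookie to a fingerprint of IP address, user agent, and country taken at login. The thresholds, class names, and the `country` value (assumed to come from a GeoIP lookup, not shown) are illustrative assumptions, and "sign out" is modeled only as dropping the binding; the application itself must still invalidate the session.

```python
import hashlib
import time
from collections import defaultdict, deque
from typing import Optional


class BruteForceTracker:
    """Sliding-window count of failed logins per client IP (toy persistent store)."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 300.0) -> None:
        self.max_failures = max_failures
        self.window = window_seconds
        self.failures = defaultdict(deque)  # ip -> timestamps of recent failures

    def record_failure(self, ip: str, now: Optional[float] = None) -> bool:
        """Record a failed login; return True once this IP should be blocked."""
        now = time.time() if now is None else now
        events = self.failures[ip]
        events.append(now)
        while events and events[0] <= now - self.window:
            events.popleft()  # forget failures older than the window
        return len(events) >= self.max_failures


class SessionBinder:
    """Bind a session cookie to the client fingerprint seen at login."""

    def __init__(self) -> None:
        self.bindings = {}  # session id -> fingerprint hash

    @staticmethod
    def fingerprint(ip: str, user_agent: str, country: str) -> str:
        return hashlib.sha256(f"{ip}|{user_agent}|{country}".encode()).hexdigest()

    def bind(self, session_id: str, ip: str, user_agent: str, country: str) -> None:
        self.bindings[session_id] = self.fingerprint(ip, user_agent, country)

    def check(self, session_id: str, ip: str, user_agent: str, country: str) -> bool:
        """Return True if the request matches the binding; otherwise revoke it."""
        expected = self.bindings.get(session_id)
        if expected is not None and expected == self.fingerprint(ip, user_agent, country):
            return True
        self.bindings.pop(session_id, None)  # modeled "sign out": drop the binding
        return False
```

Binding to the IP address is strict: users on mobile networks can legitimately change IP mid-session, so some deployments bind only to the user agent and a coarser geolocation.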

With an embedded WAF we can enable dynamic security assessment and virtual patching. Dynamic assessment recognizes vulnerabilities by analyzing responses; this way we can detect a vulnerability well before it is discovered and exploited by others. When a vulnerability is found it needs to be patched, but patching the source code takes time and could affect the current release cycle. Patching it ourselves is not recommended and won't be allowed in most cases. The best and least time-consuming option is virtual patching: identify the vulnerable URL and the vulnerability, then create a rule that blocks exploitation of that vulnerability. This way we virtually patch the vulnerability and give the developers time to work on a real fix. Peace of mind for both.
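A minimal Python sketch of both ideas: `assess_response` scans a response body for error text that often signals a flaw (the two signature patterns are illustrative, not exhaustive), and `virtual_patch_blocks` enforces a per-URL rule once a flaw is confirmed. The `/product` URL and its numeric `id` argument are hypothetical; the patch simply rejects any `id` that is not 1 to 10 digits, which stops an injection there without touching the application code.

```python
import re
from urllib.parse import parse_qsl, urlsplit

# Response fragments that commonly reveal a vulnerability (illustrative only).
LEAK_SIGNATURES = {
    "sql_error": re.compile(r"you have an error in your sql syntax|unclosed quotation mark", re.I),
    "stack_trace": re.compile(r"traceback \(most recent call last\)", re.I),
}

# Virtual patches: (path, argument) -> allowed value pattern (hypothetical example).
VIRTUAL_PATCHES = {
    ("/product", "id"): re.compile(r"[0-9]{1,10}"),
}


def assess_response(path: str, body: str) -> list:
    """Dynamic assessment: report leak signatures found in a response body."""
    return [f"{name} on {path}" for name, rx in LEAK_SIGNATURES.items() if rx.search(body)]


def virtual_patch_blocks(url: str) -> bool:
    """Return True if the request violates a virtual patch and should be denied."""
    parsed = urlsplit(url)
    for arg, value in parse_qsl(parsed.query, keep_blank_values=True):
        allowed = VIRTUAL_PATCHES.get((parsed.path, arg))
        if allowed is not None and not allowed.fullmatch(value):
            return True
    return False


if __name__ == "__main__":
    print(assess_response("/product", "You have an error in your SQL syntax near ''"))
    print(virtual_patch_blocks("/product?id=1%27%20OR%20%271%27=%271"))
```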

Any security process needs visibility: what attacks happened this month, and is there an increase in attacks? The why, when, and how are essential for effective threat modeling. Most medium to large companies will have centralized logging, more or less stipulated by regulatory requirements. Keep in mind that we need to send the WAF logs to the central logging server with integrity, confidentiality, and availability. The centralized log server could very well be Elasticsearch with Kibana, or something much simpler or much more complex than that.
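For the integrity part, one option is to sign each WAF log record with an HMAC before shipping it, so the central server can detect modified records. This Python sketch assumes a shared secret key distributed out of band; it covers integrity only, with confidentiality expected to come from an encrypted transport such as TLS and availability from buffering and retries, neither of which is shown. A per-record HMAC also cannot detect records that were dropped; adding a sequence number to each record would help with that.

```python
import hashlib
import hmac
import json
from typing import Optional


def sign_record(record: dict, key: bytes) -> str:
    """Serialize a log record canonically and wrap it with an HMAC-SHA256 tag."""
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, payload.encode(), hashlib.sha256).hexdigest()
    return json.dumps({"payload": payload, "hmac": tag})


def verify_line(line: str, key: bytes) -> Optional[dict]:
    """Return the record if its tag is valid, or None if it was altered."""
    envelope = json.loads(line)
    expected = hmac.new(key, envelope["payload"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, envelope["hmac"]):
        return None
    return json.loads(envelope["payload"])


if __name__ == "__main__":
    key = b"replace-with-a-real-secret"
    line = sign_record({"rule_id": 1001, "ip": "10.0.0.1", "action": "deny"}, key)
    print(verify_line(line, key))
```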

As for final thoughts, ModSecurity doesn’t have built-in intelligence. It does what we tell it to do, and that’s the beauty of ModSecurity. We can create rules with persistent storage to give it a form of “dumb” intelligence. In real scenarios we need a bit more: we need to know about emerging threats and be prepared before they hit our server. I achieve this in two ways: first, by converting Snort Emerging Threats rules and loading them into ModSecurity with a Lua or Python script; second, by using Google Safe Browsing data within rules to determine the outcome.
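The conversion step could look something like this Python sketch. It handles only simple Snort 2.x style HTTP rules, where a `content` match is followed by a modifier such as `http_uri`, and turns them into chained `SecRule` lines using `@contains`. Hex bytes, quotes, backslashes, or a content with no recognized HTTP modifier make it return `None` so the rule can be converted by hand; other options (for example `nocase`, `depth`, or `pcre`) are ignored, so review every output. The sample rules, their `sid` values, and the `id_offset` parameter are hypothetical.

```python
import re
from typing import List, Optional, Tuple

# name[:value]; pairs, where value may be a double-quoted string.
OPTION_RX = re.compile(r'(\w+)(?:\s*:\s*("(?:[^"\\]|\\.)*"|[^;]*))?\s*;')

# Snort 2.x HTTP content modifiers mapped to ModSecurity variables (small subset).
BUFFER_TO_VARIABLE = {
    "http_uri": "REQUEST_URI",
    "http_header": "REQUEST_HEADERS",
    "http_client_body": "REQUEST_BODY",
}
UNSUPPORTED_CHARS = set("\"'|\\")  # quotes, hex-byte markers, escapes


def parse_options(rule: str) -> List[Tuple[str, str]]:
    """Split the '( ... )' section of a Snort rule into (name, value) pairs."""
    body = rule[rule.index("(") + 1 : rule.rindex(")")]
    return [(name, value.strip().strip('"')) for name, value in OPTION_RX.findall(body)]


def snort_to_secrule(rule: str, id_offset: int = 0) -> Optional[str]:
    """Convert a simple content-only Snort HTTP rule into chained SecRule lines."""
    options = parse_options(rule)
    values = dict(options)
    msg, sid = values.get("msg", "converted rule"), values.get("sid")
    if sid is None or not sid.isdigit():
        return None
    matches = []  # (variable, content); Snort ANDs contents, so we chain them
    for i, (name, content) in enumerate(options):
        if name != "content":
            continue
        variable = None
        for later, _ in options[i + 1:]:
            if later == "content":
                break  # modifiers after the next content belong to it
            variable = BUFFER_TO_VARIABLE.get(later, variable)
        if variable is None or UNSUPPORTED_CHARS & set(content + msg):
            return None  # hex bytes, quotes or unknown buffer: convert by hand
        matches.append((variable, content))
    if not matches:
        return None
    lines = []
    for n, (variable, content) in enumerate(matches):
        actions = []
        if n == 0:  # only the first rule of a chain carries id, phase and disruptive action
            actions = [f"id:{id_offset + int(sid)}", "phase:2", "deny", "log", f"msg:'{msg}'"]
        if n < len(matches) - 1:
            actions.append("chain")
        rule_line = f'SecRule {variable} "@contains {content}"'
        joined = ",".join(actions)
        lines.append(f'{rule_line} "{joined}"' if actions else rule_line)
    return "\n".join(lines)


if __name__ == "__main__":
    snort = ('alert tcp $EXTERNAL_NET any -> $HTTP_SERVERS $HTTP_PORTS '
             '(msg:"Example probe"; flow:to_server,established; '
             'content:"/cgi-bin/test.cgi"; http_uri; sid:9000001; rev:1;)')
    print(snort_to_secrule(snort))
```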

We should have visibility into what we are protecting and control over every phase of the web application's communication. Enforce rules that are relevant to your installation. Track everything, but obfuscate critical information such as credit card numbers, PII, and passwords in the logs. Don’t trust anything; consider everything a possible attack.
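Masking can happen before a log line is written or shipped. This Python sketch masks anything that looks like a 13 to 19 digit card number and passes the Luhn check (keeping the last four digits), and blanks the values of a few password-like parameters. The parameter names and patterns are assumptions; real PII detection needs patterns specific to your application.

```python
import re

# 13-19 digits, optionally separated by single spaces or dashes.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")
# Parameter names whose values must never reach the logs (assumed list).
SENSITIVE_PARAM = re.compile(r"(?i)\b(password|passwd|pwd|token)=([^&\s]*)")


def luhn_valid(number: str) -> bool:
    """Luhn checksum, used to avoid masking order IDs and other long numbers."""
    digits = [int(c) for c in number if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def mask_log_line(line: str) -> str:
    """Mask card numbers (keep the last four digits) and sensitive parameter values."""
    def mask_card(match: "re.Match") -> str:
        digits = re.sub(r"\D", "", match.group(0))
        if not luhn_valid(digits):
            return match.group(0)  # not a card number: leave it readable
        return "*" * (len(digits) - 4) + digits[-4:]

    line = CARD_CANDIDATE.sub(mask_card, line)
    return SENSITIVE_PARAM.sub(lambda m: f"{m.group(1)}=****", line)


if __name__ == "__main__":
    print(mask_log_line("POST /pay card=4111 1111 1111 1111&password=hunter2"))
```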

An attacker has nothing to lose and everything to gain. Defenders have everything to lose and nothing to gain.

GiBu GeorGe